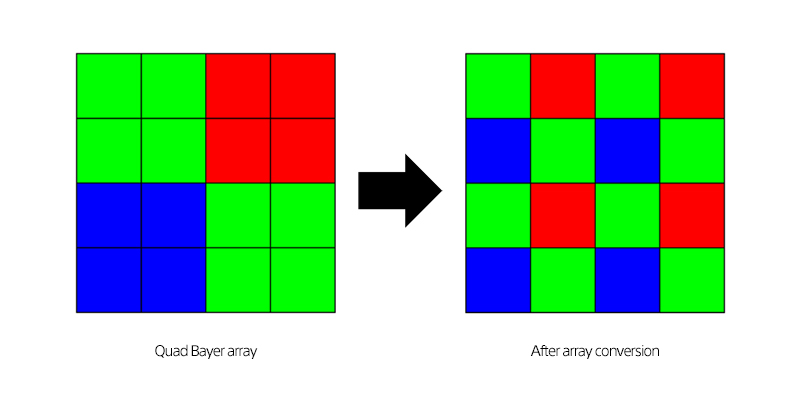

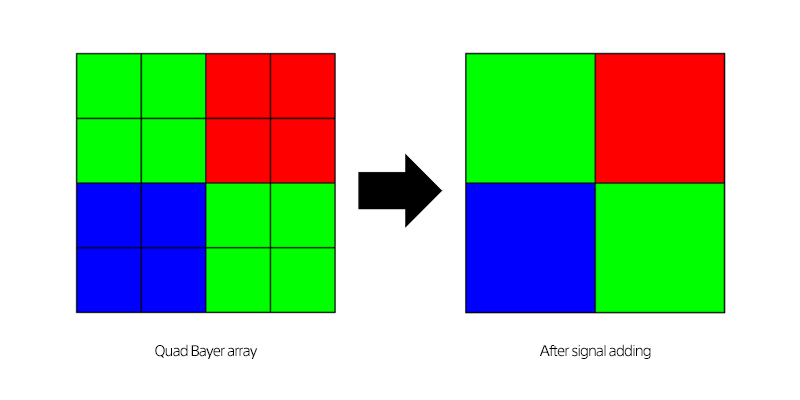

Image sensors, particularly those with tiny pixels like the ones inside smartphones, have an on-chip micro-lens array. These micro-lenses help steer incoming light onto each pixel's photodiode. Until now, every pixel had its own lens. Sony has changed that with a way for four neighboring pixels to share the same lens, so compared with the earlier technology, cameras should be twice as accurate when searching for focus. This is made possible by the newly revealed Sony 2×2 On-Chip Lens (OCL) solution for upcoming smartphone image sensors.

On the 10th of December this year, Sony revealed its first 2×2 On-Chip Lens (OCL) solution. The company describes it as “a new image sensor technology” for achieving focus much faster, along with higher resolution, much higher light sensitivity, and high dynamic range. Previously, even the best Sony Quad Bayer sensors had an individual on-chip lens over each and every pixel. The new design breaks that convention by placing a single on-chip lens over each group of four neighboring pixels.

Pixel Structure | Sony 2×2 On-Chip Lens

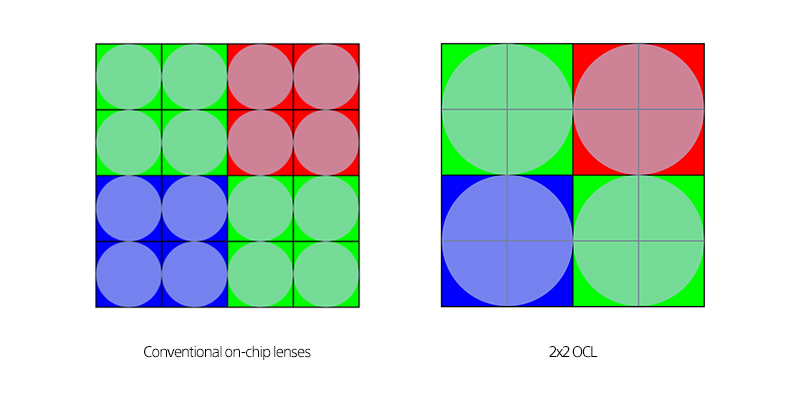

The condenser lens, also known as the “on-chip lens,” sits at the top of the image sensor's pixels. Conventionally, each pixel has its own on-chip lens. In 2×2 OCL, however, four adjacent pixels of the same color share one on-chip lens.
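To picture the difference between one lens per pixel and one lens per 2×2 group, here is a minimal sketch. It assumes a Quad Bayer layout in which each 2×2 block of pixels shares one color and the blocks repeat as R, G / G, B; the function names are hypothetical and only illustrate the indexing, not Sony's actual design.

```python
# Hypothetical sketch: which color filter and which on-chip lens each pixel sits under.
# Assumes a Quad Bayer layout (each 2x2 block of pixels has one color) with the
# blocks repeating as R, G / G, B. Width is the sensor width in pixels.

QUAD_BAYER_BLOCKS = [["R", "G"],
                     ["G", "B"]]

def color_at(row: int, col: int) -> str:
    """Color filter over pixel (row, col) in the assumed Quad Bayer pattern."""
    block_row, block_col = (row // 2) % 2, (col // 2) % 2
    return QUAD_BAYER_BLOCKS[block_row][block_col]

def lens_id_conventional(row: int, col: int, width: int) -> int:
    """Conventional layout: every pixel has its own on-chip lens."""
    return row * width + col

def lens_id_2x2_ocl(row: int, col: int, width: int) -> int:
    """2x2 OCL layout: each 2x2 block of same-color pixels shares one lens."""
    lenses_per_row = width // 2
    return (row // 2) * lenses_per_row + (col // 2)

if __name__ == "__main__":
    width = 8
    block = [(0, 0), (0, 1), (1, 0), (1, 1)]  # top-left 2x2 block
    print({color_at(r, c) for r, c in block})                          # {'R'}
    print({lens_id_2x2_ocl(r, c, width) for r, c in block})            # {0}
    print(len({lens_id_conventional(r, c, width) for r, c in block}))  # 4
```

In the top-left block, all four pixels report the same color and the same 2×2 OCL lens index, while the conventional layout gives them four separate lenses.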

The following benefits are realized:

- Phase differences can be detected across all pixels

- Improved phase difference detection performance (focus performance)

Phase Difference Detection Across the Sensor | Sony 2×2 On-Chip Lens

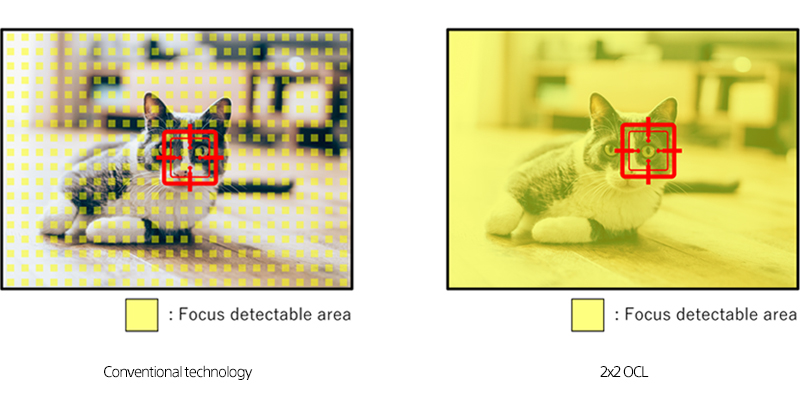

The primary benefit of this design is better autofocus performance, especially in low-light conditions. In the 2×2 OCL method, the imaging pixels can also be used as phase-detection pixels, so phase differences are detectable across the entire image sensor. As a result, the camera can focus on even tiny objects with higher precision.
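The general idea behind phase-detection autofocus can be sketched in a few lines. Pixels on opposite sides of a shared lens see the scene through opposite halves of the lens aperture, so when the image is out of focus the "left" and "right" pixel signals are shifted copies of each other. The sketch below, a simplified assumption rather than Sony's actual pipeline, estimates that shift with a plain sum-of-absolute-differences search; the function name and the `max_shift` parameter are hypothetical.

```python
# Simplified phase-detection sketch (assumption: not Sony's actual pipeline).
# A defocused edge appears shifted between the left-pixel signal and the
# right-pixel signal. The shift that best aligns them is the phase difference;
# its sign and size hint at which way and how far to move the lens.

from typing import Sequence

def estimate_phase_shift(left: Sequence[float], right: Sequence[float],
                         max_shift: int = 4) -> int:
    """Return the integer shift s minimizing mean |left[i] - right[i + s]|."""
    best_shift, best_cost = 0, float("inf")
    n = len(left)
    for s in range(-max_shift, max_shift + 1):
        # Only compare samples where both signals overlap at this shift.
        pairs = [(left[i], right[i + s]) for i in range(n) if 0 <= i + s < n]
        if not pairs:
            continue
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift

if __name__ == "__main__":
    # A blurred edge seen by the left pixels, and the same edge two samples
    # later as seen by the right pixels (i.e. the scene is out of focus).
    left = [0, 0, 0, 10, 50, 90, 100, 100, 100, 100, 100, 100]
    right = [0, 0, 0, 0, 0, 10, 50, 90, 100, 100, 100, 100]
    print(estimate_phase_shift(left, right))  # 2
    print(estimate_phase_shift(left, left))   # 0 (in focus)
```

Real PDAF systems calibrate per sensor and convert the measured shift into a lens movement; this sketch only shows why having detection-capable pixels everywhere gives more signals to compare.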

Improved Focus in low-light environments

Because conventional PDAF lacks pixels for lateral phase detection, it was not good at detecting objects that did not move vertically. The 2×2 OCL sensor solves that problem while also delivering faster PDAF-based autofocus across a wider range of scenes.
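One way to see why four pixels under one lens help in more than one direction: the same 2×2 block can be split into left/right halves and into top/bottom halves, whereas a phase-detection pixel pair split along a single axis only measures shifts along that axis. The illustrative sketch below assumes plain numeric pixel values and hypothetical function names; it is not a description of Sony's readout.

```python
# Illustrative sketch (assumption): split each 2x2 OCL block into halves along
# both axes. Left/right sums feed horizontal phase detection; top/bottom sums
# feed vertical phase detection. The full sum can still serve as an image value.

from typing import Dict, List, Tuple

Block = Tuple[Tuple[float, float], Tuple[float, float]]  # ((tl, tr), (bl, br))

def split_block(block: Block) -> Dict[str, float]:
    (tl, tr), (bl, br) = block
    return {
        "left": tl + bl,             # pixels on the left side of the shared lens
        "right": tr + br,            # pixels on the right side
        "top": tl + tr,              # pixels on the top side
        "bottom": bl + br,           # pixels on the bottom side
        "image": tl + tr + bl + br,  # binned value usable as an ordinary image pixel
    }

def horizontal_phase_signals(blocks: List[Block]) -> Tuple[List[float], List[float]]:
    """Left and right signals along a row of blocks."""
    halves = [split_block(b) for b in blocks]
    return [h["left"] for h in halves], [h["right"] for h in halves]

def vertical_phase_signals(blocks: List[Block]) -> Tuple[List[float], List[float]]:
    """Top and bottom signals along a column of blocks."""
    halves = [split_block(b) for b in blocks]
    return [h["top"] for h in halves], [h["bottom"] for h in halves]

if __name__ == "__main__":
    print(split_block(((1, 2), (3, 4))))
    # {'left': 4, 'right': 6, 'top': 3, 'bottom': 7, 'image': 10}
```

Each pair of signal lists could then be fed to a shift estimator to measure phase differences in that direction.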

When this will reach consumers is not yet known, although Q2/Q3 2020 seems possible. Since the sensor was announced only a week ago, Sony has not specified a launch date or which device will feature it first.


(Source)

Mobile Arrival Smartphones and gadget reviews, news and more.


Well, I am just wondering whether this new technology will have an impact on low-light performance (and not only on low-light autofocus performance).

Indeed, the next camera said to benefit from this technology is the rumored A7S IV, with a full-frame 15MP sensor: fewer pixels than the usual 24MP, but bigger pixels to catch more light. HOWEVER, the pixel size is described as: 7.52µm (3.76µm, 2×2 OnChipLens)

So which size should be considered the actual size of a pixel, in your opinion? 7.52 or 3.76?

I would go with 3.76µm. With the 2×2 On-Chip Lens Solution, the 7.52µm figure describes a group of 2×2 pixels sharing one lens: 3.76µm × 2 = 7.52µm per side. The actual size of the pixel should always be the smaller number.
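The arithmetic in the reply above can be checked with a quick back-of-the-envelope calculation. This sketch assumes square pixels and one shared lens per 2×2 group of pixels; the 3.76µm figure is the one quoted in the comment, and the variable names are illustrative only.

```python
# Back-of-the-envelope sketch, assuming square pixels and one lens per 2x2 group.
PIXEL_PITCH_UM = 3.76      # pitch of one pixel (figure quoted in the comment)
PIXELS_PER_LENS_SIDE = 2   # 2x2 On-Chip Lens

lens_pitch_um = PIXEL_PITCH_UM * PIXELS_PER_LENS_SIDE  # side length of one lens group
pixel_area_um2 = PIXEL_PITCH_UM ** 2
lens_area_um2 = lens_pitch_um ** 2

print(f"lens pitch: {lens_pitch_um:.2f} µm")                 # lens pitch: 7.52 µm
print(f"area ratio: {lens_area_um2 / pixel_area_um2:.0f}x")  # area ratio: 4x
```

Under these assumptions, each shared lens covers a 7.52µm square containing four 3.76µm pixels.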
